Getting Started

1. The Application Scenario

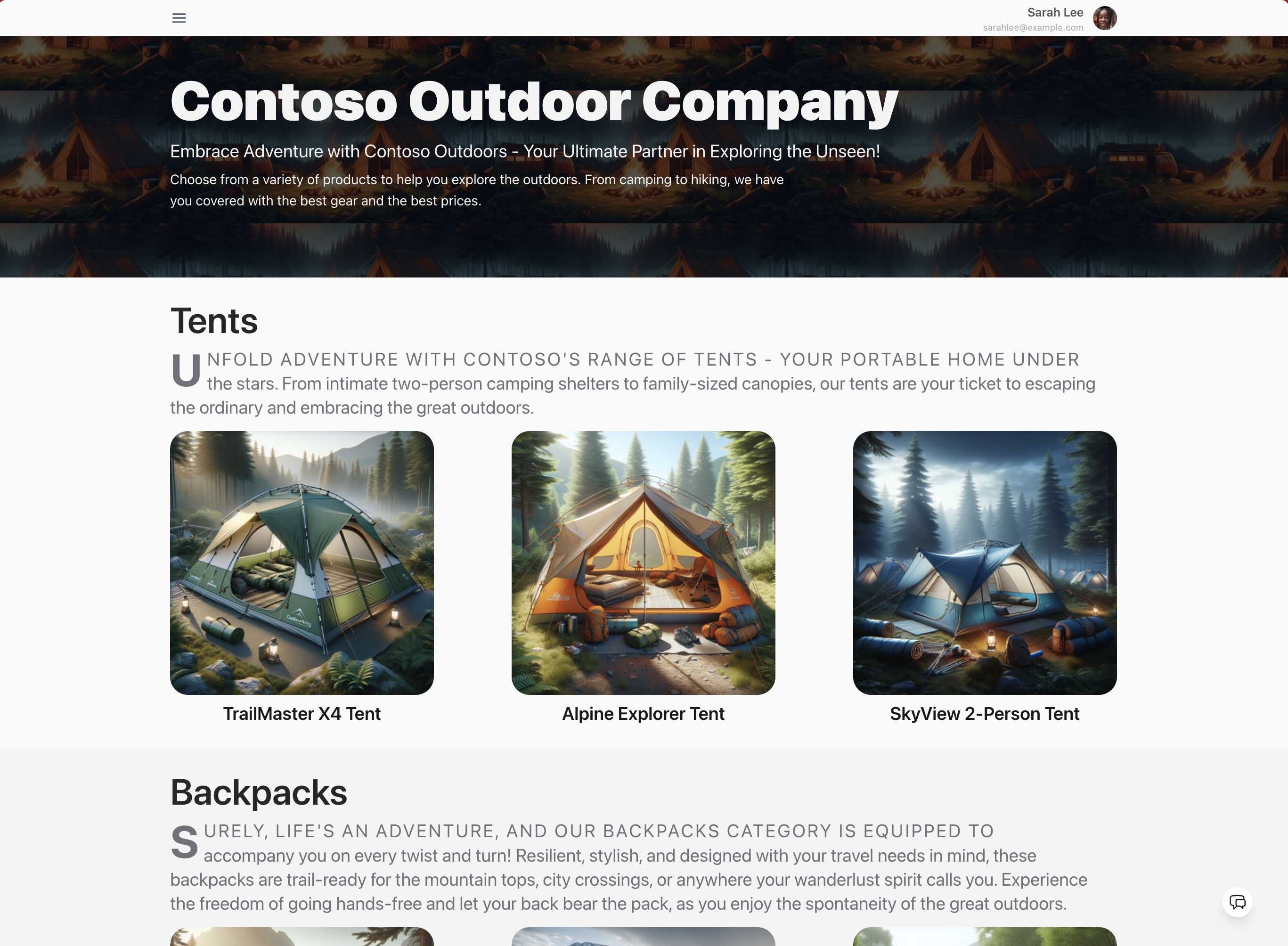

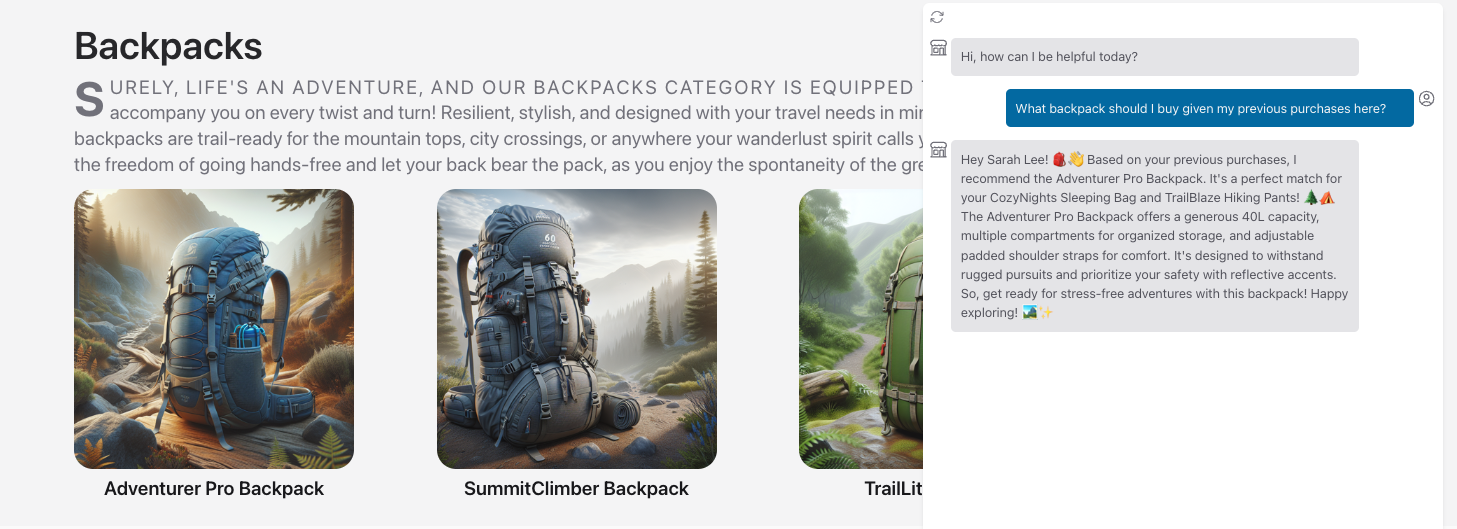

Contoso Outdoor is a fictitious company that sells hiking and camping gear to outdoor adventure enthusiasts. They have multiple product categories, multiple items per category, and extensive information for each item, which makes it difficult for customers to quickly discover items or information on the site and can cost the company revenue. The company decides to invest in building a retail copilot solution that is integrated into their website as a customer service chatbot (AI assistant).

Customers on the site can now click the chat icon and ask the copilot questions in natural language, and receive answers about items in the product catalog, or get recommendations based on their previous purchases. The retail copilot solution uses a retrieval augmented generation (RAG) architecture to ensure that responses are grounded in the company’s product and customer data.

2. The RAG Architecture

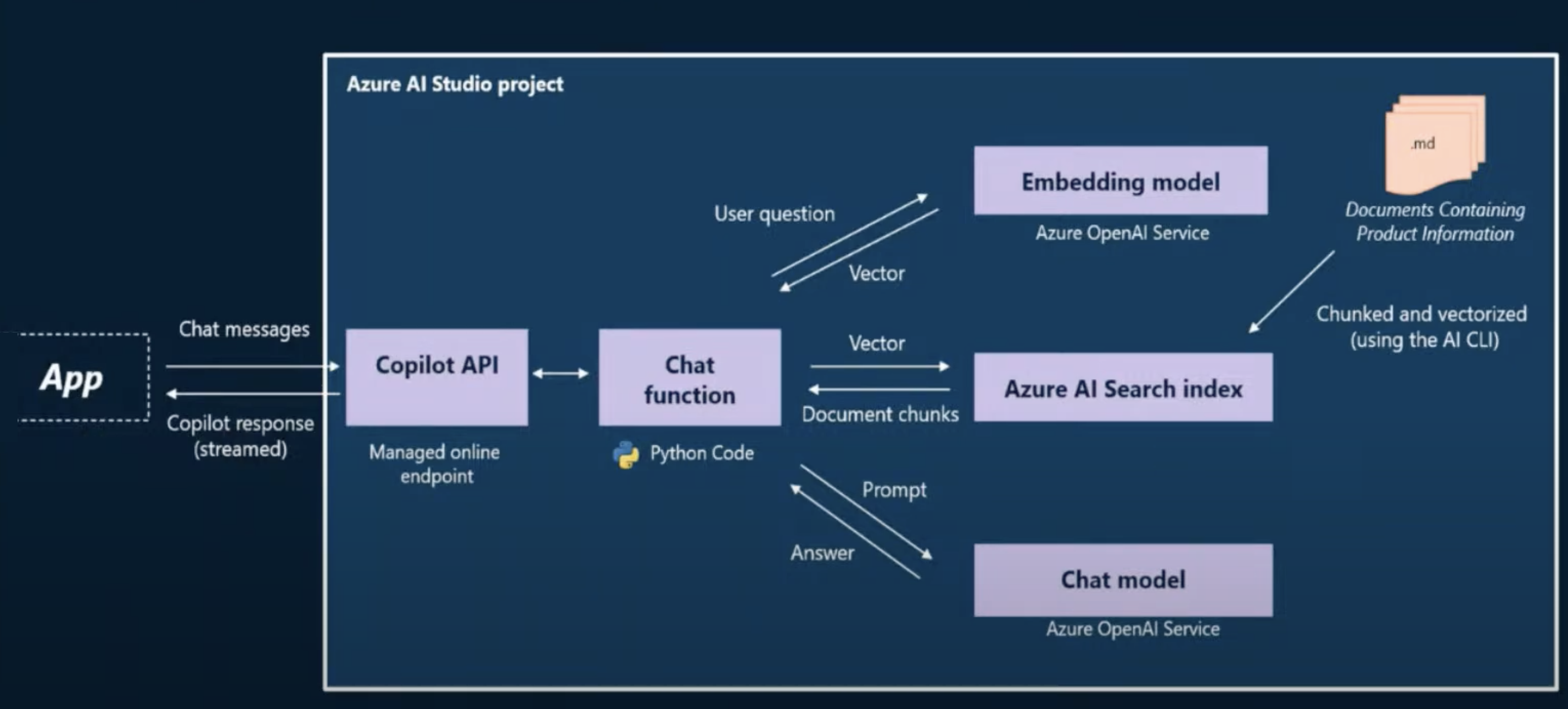

The retrieval augmented generation approach follows the workflow shown in the figure:

- Customer requests from the website (App frontend) are sent to the Copilot (API backend) and processed by our copilot chat implementation.

- The request (natural language) is converted into a numeric representation (vector query) using an Azure OpenAI embedding model.

- The vectorized query is then used for retrieval of matching products from the product index maintained in Azure AI Search.

- The resulting matches are used to augment the user query, sending an enhanced prompt to the Azure OpenAI chat completion model.

- The model’s response is then returned to the user and displayed in the web app.
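The steps above can be sketched in a few lines of Python. This is a minimal, self-contained illustration and not the Contoso Chat implementation: the catalog entries, the `embed`, `retrieve`, `build_prompt`, and `answer` helpers, and the word-count "embedding" are all assumptions made for this example. In the real solution, an Azure OpenAI embedding model would produce the vectors, Azure AI Search would hold the product index, and an Azure OpenAI chat completion model would take the place of the `chat_model` callable.

```python
import math
import re
from collections import Counter

# Hypothetical catalog; stands in for the product index in Azure AI Search.
CATALOG = [
    {"name": "Summit Tent", "description": "waterproof four person camping tent"},
    {"name": "Ridge Hiking Boots", "description": "leather hiking boots with ankle support"},
    {"name": "Alpine Sleeping Bag", "description": "insulated sleeping bag for cold weather camping"},
]


def embed(text):
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, k=2):
    """Vectorize the query and return the k most similar products."""
    query_vector = embed(query)
    scored = [
        (cosine(query_vector, embed(p["name"] + " " + p["description"])), p)
        for p in CATALOG
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [product for _, product in scored[:k]]


def build_prompt(query, matches):
    """Augment the user query with the retrieved product context."""
    context = "\n".join(f"- {p['name']}: {p['description']}" for p in matches)
    return (
        "Answer using only the products listed below.\n"
        f"Products:\n{context}\n"
        f"Customer question: {query}"
    )


def answer(query, chat_model):
    """Retrieve, augment, then generate; chat_model is any callable prompt -> text."""
    matches = retrieve(query)
    return chat_model(build_prompt(query, matches))


if __name__ == "__main__":
    # Echo the prompt back instead of calling a real chat completion model.
    print(answer("waterproof tent for camping", chat_model=lambda prompt: prompt))
```

Passing the chat model in as a callable keeps the retrieval and prompt-building steps testable on their own, which is useful later when responses need to be evaluated.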

The architecture emphasizes four aspects. First, we need to provision resources to store the customer purchase history (database) and company product catalog (search indexes). Second, we need to deploy models (embedding, chat completion) that we can invoke during this interaction flow. Third, we need orchestration tools to coordinate the various interactions that need to occur between the receipt of the user request (prompt) and the returning of the final response (answer). And finally, we need to deploy the copilot to get a hosted endpoint accessible to the web app.

3. The Paradigm Shift

But there’s more. Because we deal with natural language (inputs, outputs), we need new tools and methodologies to evaluate responses for quality and efficiency. This includes ensuring responsible AI operation that detects and mitigates threats like jailbreaking, and protects against harmful content that violates desired safety guidelines.

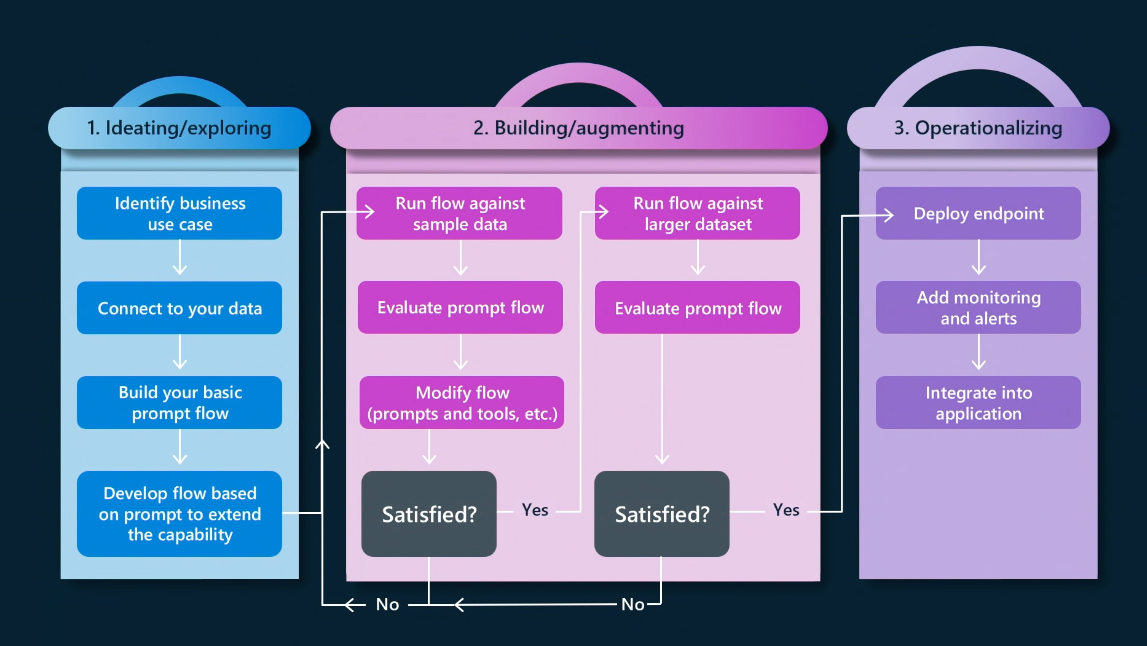

This is creating a paradigm shift from traditional MLOps application lifecycles (for predictive AI) to new LLMOps lifecycles (for generative AI). We now need tools that can streamline end-to-end workflows from design (ideation) to development (evaluation & iteration) and deployment (operationalization), and that simplify critical processes from model selection to resource provisioning and application monitoring.

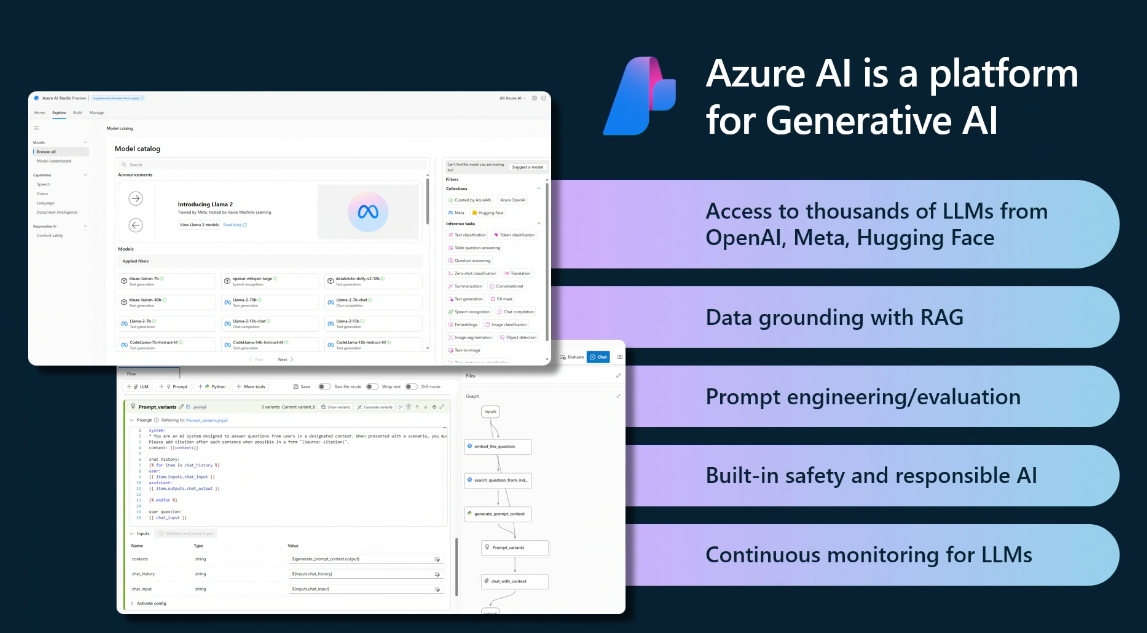

4. The Azure AI Platform

This is where the Azure AI Platform comes into the picture. Azure AI Studio is the unified platform for building generative AI solutions on Azure. With Azure AI Studio, developers can explore and select models from the Model Hub, manage their model deployments with AI hub resources, and build & deploy applications with AI project resources. They can do this using the Azure AI Studio UI (low-code) or the Azure AI SDK (code-first).

For our retail copilot solution, we will adopt the code-first approach, using relevant tools & processes to build, evaluate, deploy, and test the Contoso Chat application on Azure AI.